By: Stephen Sklarew, Oct 30, 2025 6:15:11 PM

Contrary to popular perception, most AI failures don’t look like crashes at all. They look like silence: the AI launches, the dashboards load, and the frontline keeps doing what it has always done.

Case in point: a manufacturing client of ours wanted a “quick fix” to a sales forecast that the last vendor had built but no one used. We urged them to meet the people who rely on the forecast up front; the response was “just improve the model.” Tests looked great, with better features, cleaner data, and lower error, yet adoption didn’t budge. After go‑live, the real issue surfaced: the user interface didn’t fit how revenue leaders prefer to review demand, the controls felt foreign, and the graphs lagged. The model wasn’t the blocker; the lack of focus on the user experience was.

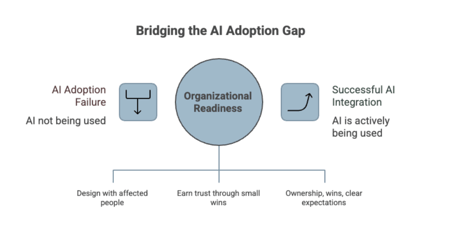

This isn’t a data or model problem; it’s an organizational readiness problem. The change never took root because the people most affected were never brought into the story, the workflow, or the win.

Despite billions being poured into AI, the adoption gap persists because leaders over-index on technical milestones and under-invest in human systems: trust, incentives, governance, and feedback, where adoption is actually won or lost.

So, what’s the real unlock? As you’ll discover in this article, we ought to treat AI as a transformation of decisions, roles, and culture, not as a tool installation, and make change management the first mile, the middle mile, and the last mile of the journey.

I’ve seen too many AI initiatives clear the “it works” bar but miss the “it’s used” bar (there’s a world of difference, mind you!), so value stays theoretical and pilots never scale beyond the innovation sandbox.

Under the surface, the root causes are predictable: cultural resistance masquerading as “it’s not accurate enough,” brittle trust in probabilistic outputs, pilot paralysis that fixes nothing at scale, and leadership narratives that oversell potential while underpreparing people for new ways of working.

The practical formula is simple: value is a function of strategy, technical excellence, and organizational readiness. If readiness is near zero, value trends toward zero, no matter how elegant the AI is. I’ve seen this happen so many times I’ve lost count.
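One way to make that formula concrete is to score each factor between 0 and 1 and multiply them. The multiplicative form, the 0-to-1 scale, the sample scores, and the name `expected_value` are all assumptions made for illustration; the point is only that a product collapses when any one factor does.

```python
def expected_value(strategy: float, technical: float, readiness: float) -> float:
    """Illustrative score: the product of three factors, each scored in [0, 1].

    The multiplicative form is an assumption, chosen because it makes
    value collapse whenever any single factor is near zero.
    """
    factors = (("strategy", strategy), ("technical", technical), ("readiness", readiness))
    for name, score in factors:
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {score}")
    return strategy * technical * readiness


# Hypothetical scores: an excellent model that the organization is not ready for...
strong_model_low_readiness = expected_value(strategy=0.9, technical=0.95, readiness=0.05)
# ...versus a more modest model that lands in a prepared organization.
modest_model_high_readiness = expected_value(strategy=0.9, technical=0.7, readiness=0.8)

print(f"strong model, low readiness:  {strong_model_low_readiness:.3f}")
print(f"modest model, high readiness: {modest_model_high_readiness:.3f}")
```

With these made-up scores, the modest model in a ready organization comes out well ahead of the stronger model nobody uses.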

Leaders often act as if adoption will follow capability; in reality, adoption follows incentives, role identity, and narrative. Do people feel ownership, see the win, and know what “good” looks like with the new AI tool in their hands? To close the gap, leaders need to design the change up front with the people whose work will change, then sequence deployment to earn trust through small wins that compound rather than big-bang rollouts that overwhelm.

AI isn’t another predictable, rule-based system upgrade. What is it then? AI is a probabilistic copilot that changes how judgment is exercised and how accountability is shared between humans and machines. But you’ll immediately notice some very specific anxieties: fear of redundancy, fear of being second-guessed by “the AI,” and fear of being held accountable for an opaque recommendation whose inner workings are hard to explain to a customer or regulator.

Unlike traditional software development, where requirements are largely fixed up front, AI demands continuous iteration: new data arrives and inference outputs shift, new edge cases appear, and new norms emerge, so the change is ongoing by design.

This is why treating AI as plug-and-play backfires; the job isn’t to “train and install a model,” it’s to re-architect decision flows, redefine roles, and build a trust fabric that can hold evolving probabilistic guidance without eroding confidence or compliance. Leaders who anticipate these dynamics by investing in explainability, re-skilling, and clear decision rights avoid performative pilots and replace them with durable capability building.

AI itself is not a strategy; it can be an enabler of your organizational strategy. Let’s take a look at how you can achieve this.

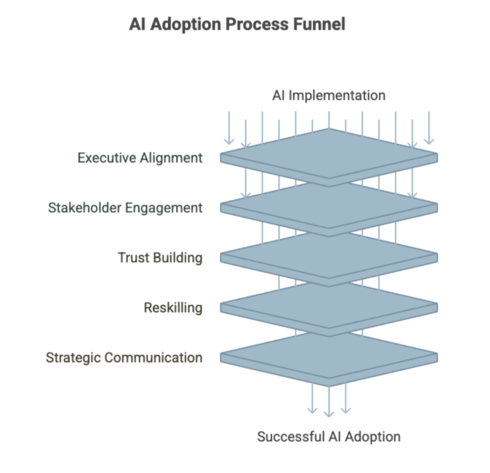

Executive alignment and sponsorship

If AI lives only as an IT project, it dies as an orphan. When business leaders frame AI as a strategic enabler and repeatedly tie it to business outcomes, adoption becomes a leadership drumbeat (and not a technical memo). Strong sponsors do three things: set a clear “why now,” remove cross-functional blockers, and celebrate behavior change publicly, because what leaders recognize becomes what the organization reproduces.

Stakeholder engagement early and often

Adoption is easiest when the people closest to the work co-design how AI shows up in their day; business domain experts should shape features, thresholds, and exception pathways, not just test them at the end. Shared ownership beats handoffs: “tech throws AI over the wall at the business” produces resistance, while co-creation produces pride and process fit that survives beyond a pilot.

Build trust by design

If there’s one thing I’ve learned over the years, it’s that trust doesn’t come from asking others to “trust me.” It comes from intelligibility: explaining confidence bands, surfacing the top drivers behind a recommendation, and making limitations explicit so people know when to lean in and when to override.

A real-world example: We built a customer-facing HR chatbot, but the client hesitated to launch, worried that a wrong answer could damage their brand. The breakthrough was resetting expectations: LLMs may phrase answers differently, but the substance must be governed by guardrails, approved sources, and response policies. Once leaders saw that variability in wording didn’t mean variability in quality, and that data governance could standardize substance, they greenlit rollout. The bot went live under clear controls and quickly reduced handle times without sacrificing trust.

Reskill, don’t just retrain

There’s a fundamental difference here. Retraining says “click here, then here”; reskilling elevates roles: analysts become sense-makers, salespeople become risk-aware consultants, operators become exception managers who improve the system over time. Plant “AI champions” in every team: hands-on peers who model the new workflows, surface friction to product teams, and accelerate peer-to-peer learning that no LMS (Learning Management System) can replace.

Communication as a strategic lever

Silence breeds rumor, and rumor breeds fear. Build a narrative that is honest about change and concrete about benefits at the role level: time saved, risk avoided, revenue captured. Tailor the message to the audience: what a compliance officer needs to hear is not what a store manager needs to hear. Oh, and repeat it across channels until it becomes the new common sense!

Continuous feedback loops

Treat adoption like a product metric: measure usage depth, task completion with and without AI, time-to-value, override rates, and trust sentiment via pulse surveys and targeted interviews. Close the loop by changing both the model and the workflow; if people don’t use the feature, assume the workflow is a misfit, not that the users “don’t get it.”

We worked with a large client to train a model that predicted which overdue accounts would pay after a rep email or call, but leadership doubted it was worth deploying. Rather than argue accuracy, the team ran tightly scoped, real-customer trials. The results were decisive: backlog dropped and cash came in faster, giving leaders the confidence to scale. Trust here came from measured outcomes in the wild.
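As a rough illustration of measuring adoption like a product metric, the sketch below computes a few of the measures named above from a log of task events. The `TaskEvent` record, its fields, the `adoption_metrics` function, and the sample data are all hypothetical. Time-to-value and trust sentiment are left out because they usually come from other sources, such as surveys and interviews.

```python
from dataclasses import dataclass


@dataclass
class TaskEvent:
    """One task handled by a user (hypothetical log schema)."""
    user: str
    used_ai: bool     # the user consulted the AI recommendation for this task
    overridden: bool  # the user rejected or changed the recommendation
    completed: bool   # the task was finished


def _rate(numerator: int, denominator: int):
    """Return a ratio, or None when there is nothing to measure."""
    return numerator / denominator if denominator else None


def adoption_metrics(events: list[TaskEvent]) -> dict:
    """Summarize usage, overrides, and completion with and without AI."""
    with_ai = [e for e in events if e.used_ai]
    without_ai = [e for e in events if not e.used_ai]
    return {
        "usage_rate": _rate(len(with_ai), len(events)),
        "override_rate": _rate(sum(e.overridden for e in with_ai), len(with_ai)),
        "completion_with_ai": _rate(sum(e.completed for e in with_ai), len(with_ai)),
        "completion_without_ai": _rate(sum(e.completed for e in without_ai), len(without_ai)),
    }


# Made-up sample log for illustration only.
events = [
    TaskEvent("ana", used_ai=True, overridden=False, completed=True),
    TaskEvent("ana", used_ai=True, overridden=True, completed=True),
    TaskEvent("ben", used_ai=False, overridden=False, completed=True),
    TaskEvent("ben", used_ai=False, overridden=False, completed=False),
    TaskEvent("cy", used_ai=True, overridden=False, completed=True),
]

metrics = adoption_metrics(events)
for name, value in metrics.items():
    print(f"{name}: {value}")
```

A rising override rate alongside flat usage is the kind of signal that should prompt a look at the workflow before anyone blames the users.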

AI maturity without organizational maturity is a mirage; AI models that don’t change behavior don’t create value, and behavior doesn’t change without trust, narrative, incentives, and continuous learning built in from day one.

The uncomfortable question is now on the table: if the code isn’t the problem, what, exactly, will move people, and how fast can you build it into the way work gets done?

In the next article in this series, we will see how today’s winners are designing for people first: co-creating with the operators, measuring adoption like a product, and communicating a clear, honest story about what AI will do, what it won’t (that’s important too, though often overlooked), and how the organization will grow around it.

Let’s Chat. Contact me if you're interested in how to properly utilize AI.
